Last summer, when I was down in Florida for a NASA internship, I was challenged by my housemates to a summer code-off. The goal? To build the coolest piece of software in a mere 10 weeks. Having recently begun exploring the world of Twitter bots, and disappointed that there was no existing library to make Twitter API queries from C, I resolved to spend my summer building a library to do just that.

And now, 6-and-a-bit months later, in the dead of winter, I finally finished. Behold, Tweet With C!


This might seem kind of nuts -- who would want to use Twitter from such a low-level language? Well, it's not as nuts as you might think. I envisioned two major use cases when I began this project:

1. Code that wraps this library in a higher-level language (many languages have compatibility layers for working with native/C functions).

2. Projects that want to interact with Twitter without pulling in one of the potentially heavier libraries available in their own language, or that are written in a language with no Twitter API library at all. Either kind can use a wrapper like the one above to get at this library.

Essentially, I'm using C because it's the lingua franca of the modern software world, and as far as I've seen no one else has filled this niche yet. And, of course, there might be some enterprising individuals who wish to use the library from other C code.

Because of my choice of language, I am not afforded many built-in tools for making expressive code. Past efforts to endow C with higher-level features are well documented elsewhere, but for this project I wanted to see what I could come up with that would:

- Reduce the amount of code that had to be written

- Automate the process of keeping the library up to date with changes to the Twitter API

- Make code more readable and/or more understandable

- Use only mechanisms built into the C programming language as of C99 or provided by the most commonly used tools in the C community

- Avoid adding unnecessary dependencies to the project

The best solution I found to fit these constraints was a custom code generator. Also written in C.

So in this post, I'll take you on the magical adventure through metaprogramming that I've been riding on-and-off since late this summer. Hang on to your cosmetic headwear.

Part 0: The Tools We've Got

Before I ever started writing the custom preprocessor/code generator for this project, I built for myself a couple things that proved quite helpful while developing the library. Since they fall a bit into the 'metaprogramming' territory, I thought they would be valuable to bring up here.

Generics

It's not quite as 'fancy' and 'Turing-complete' as C++'s templates, but it's actually not too difficult to fake type-generic structures in C:

```c
#include <stddef.h>  // size_t

// Buffer types (essentially a block of memory with a size)
#define twc_buffer_t(T) struct { size_t Size; T* Ptr; }

typedef twc_buffer_t(void) twc_buffer;
typedef twc_buffer_t(char) twc_strbuf;
typedef twc_buffer_t(const char) twc_string;
```

For my purposes, I only really needed to use this to make a few concrete data structures which then had functions over them defined the usual way. There are a couple ways you could define type-generic functions, but they can get convoluted and ugly very quickly (or aren't type safe / very useful). Such is life in a procedural language from 1971.
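As a small illustration of "functions defined the usual way" over one of these concrete types, here is a minimal sketch. The helper names `StrFromCString` and `StrEquals` are made up for this example and are not claimed to exist in the library.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

// Same macro and typedef as in the post.
#define twc_buffer_t(T) struct { size_t Size; T* Ptr; }
typedef twc_buffer_t(const char) twc_string;

// Hypothetical helper: wrap a NUL-terminated C string without copying it.
static twc_string
StrFromCString(const char* CString)
{
    assert(CString != NULL);

    twc_string Result;
    Result.Size = strlen(CString);
    Result.Ptr = CString;
    return Result;
}

// Hypothetical helper: two strings are equal when both their lengths
// and their bytes match.
static bool
StrEquals(twc_string A, twc_string B)
{
    return A.Size == B.Size && memcmp(A.Ptr, B.Ptr, A.Size) == 0;
}
```

One detail worth knowing: every expansion of `twc_buffer_t(T)` declares a brand new anonymous struct type, so two separate expansions are not compatible with each other even for the same `T`. Giving each one a typedef name is what makes the types usable as parameters and return values.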

Option types

I used ML for a programming languages class for a bit, and one thing I quite liked about it was its notion of 'option types'. Essentially, a `T option` is just like a `T` except it can also have the value `NONE`. The fairly straightforward translation to C is a struct that holds the value plus a bool indicating whether or not the option is set. My trick for doing generic-like types above works well for this:

```c
#include <stdbool.h>

#define twc_option_t(T) struct { bool Exists; T Value; }
```

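I adopted the convention of appending a `$` character to the end of these type names to signify they were option types (strictly speaking, `$` in identifiers is an extension rather than something the C standard guarantees, though GCC, Clang, and MSVC all accept it):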

```c
typedef twc_option_t(bool) bool$;
typedef twc_option_t(int) int$;
typedef twc_option_t(float) float$;
// ...
```

I also defined a few helper macros to facilitate creating and taking apart these types:

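```c
// Parentheses around the bodies and around expr keep the macros safe
// inside larger expressions.
#define TWC_NONE(T) ((T){ .Exists = false })
#define TWC_SOME(expr, T) ((T){ .Exists = true, .Value = (expr) })
#define TWC_OPTION(cond, expr, T) ((cond) ? TWC_SOME((expr), T) : TWC_NONE(T))
```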

This is also one of the only parts of the project, to my knowledge, that _requires_ C99 (stdbool aside). If at some point in the future I want to try to make it all C89 compatible, I may have to either make these real functions, or multiline macros, neither of which strikes me as particularly appealing.
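To make the option macros a little more concrete, here is a minimal usage sketch. The macro definitions are repeated (with defensive parentheses) so the block compiles on its own. `SafeDivide`, `ValueOr`, and `Unwrap` are hypothetical names invented for the example, and the `$` in `int$` relies on the compiler accepting dollar signs in identifiers, which GCC and Clang do by default.

```c
#include <assert.h>
#include <stdbool.h>

#define twc_option_t(T) struct { bool Exists; T Value; }
typedef twc_option_t(int) int$;

#define TWC_NONE(T) ((T){ .Exists = false })
#define TWC_SOME(expr, T) ((T){ .Exists = true, .Value = (expr) })
#define TWC_OPTION(cond, expr, T) ((cond) ? TWC_SOME((expr), T) : TWC_NONE(T))

// Hypothetical example: division that reports "no answer" through the
// option instead of a magic sentinel value.
static int$
SafeDivide(int Numerator, int Denominator)
{
    // TWC_OPTION expands to a ternary, so the division is only evaluated
    // when the condition holds.
    return TWC_OPTION(Denominator != 0, Numerator / Denominator, int$);
}

// Taking an option apart: fall back to a default when nothing is there.
static int
ValueOr(int$ Option, int Default)
{
    return Option.Exists ? Option.Value : Default;
}

// Taking an option apart when the caller is sure it is set.
static int
Unwrap(int$ Option)
{
    assert(Option.Exists);
    return Option.Value;
}
```

A call such as `ValueOr(SafeDivide(Total, Count), 0)` then reads roughly like the ML pattern match it imitates, at the cost of naming the type at every construction site.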

Alright, onto the main event.

Part 1: The Metadata

I knew pretty much from the get-go that I was going to need some metaprogramming on this project, because I wanted the library to have an interface that was slightly more advanced than a function taking strings for the URL and parameters. That is, I wanted to wrap the 100-some API calls in appropriately-typed function calls with appropriately-typed parameters and be able to keep that up to date pretty much on my own if I have to. So writing all of that by hand was out of the question.

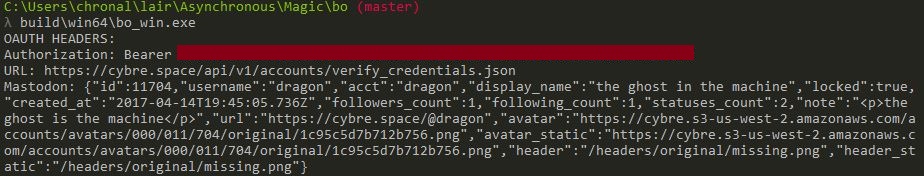

However, it wasn't until I had the basics of the library down that I knew the specifics of what I'd have to generate. Once I'd implemented the OAuth signing and written out a couple of function wrappers for the most common API calls -- `account/verify_credentials` and `statuses/update` -- it was pretty clear that the process was going to be incredibly formulaic.

Essentially, for any given API endpoint, I needed the following pieces of information to write the corresponding function:

- Endpoint path, e.g. `/statuses/update.json`

- List of parameters

- `GET` or `POST`?

Then, the code I needed to write for that endpoint would consist of a struct encompassing the parameters:

```c
typedef struct {
    // <type of param 1> <Param 1 Name>;
    // ...
    // <type of param N> <Param N Name>;
} twc_<endpoint path>_params;
```

And a function taking that struct which compiles the members into a linked list of strings (on the stack, because it doesn't need to persist beyond this call):

```c
extern twc_call_result
twc_<Endpoint Path>(twc_state* Twitter,
                    twc_<endpoint path>_params Params)
{
    twc_key_value_list ParamList = NULL;

    // <Serialization based on the type of param 1>
    ParamList = twc_KeyValueList_InsertSorted(ParamList, <serialized param 1>);
    // ...
    // <Serialization based on the type of param n>
    ParamList = twc_KeyValueList_InsertSorted(ParamList, <serialized param n>);

    return twc_MakeCall(Twitter, <relevant POST or GET value>, <relevant URL>, ParamList);
}
```

(And, of course, the corresponding function prototype to go in the header file for `#include` purposes.)
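The "linked list on the stack" idea can be sketched as follows. The names `kv_pair`, `kv_list`, and `KVList_InsertSorted` are stand-ins invented for this example; the library's real `twc_key_value_pair` and `twc_KeyValueList_InsertSorted` may be defined differently. The sorted order presumably matters because OAuth 1.0a signing normalizes request parameters in sorted order, though that is an inference rather than something stated here.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

// Hypothetical stand-ins for the library's key/value types.
typedef struct kv_pair {
    const char* Key;
    const char* Value;
    struct kv_pair* Next;
} kv_pair;

typedef kv_pair* kv_list;

// Links Pair into List so that keys stay in ascending strcmp order and
// returns the (possibly new) head. Nothing is allocated: the caller owns
// the node, typically as a local variable.
static kv_list
KVList_InsertSorted(kv_list List, kv_pair* Pair)
{
    assert(Pair != NULL);

    // Walk a pointer-to-link so inserting at the head needs no special case.
    kv_pair** Link = &List;
    while (*Link != NULL && strcmp((*Link)->Key, Pair->Key) <= 0) {
        Link = &(*Link)->Next;
    }
    Pair->Next = *Link;
    *Link = Pair;
    return List;
}

// Usage: the nodes live in this function's stack frame, so the list is
// only valid until the function returns.
static const char*
FirstKeyExample(void)
{
    kv_pair Status = { "status", "hello", NULL };
    kv_pair Lat    = { "lat", "37.78", NULL };
    kv_list Params = NULL;
    Params = KVList_InsertSorted(Params, &Status);
    Params = KVList_InsertSorted(Params, &Lat);
    return Params->Key;  // "lat" sorts before "status"
}
```

Because every node is a local variable of the generated wrapper, there is nothing to free afterwards, which fits the note above that the list does not need to outlive the call.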

So my work was cut out for me.

Part 2: The Data

Before I could generate anything, I needed a way to reason about the Twitter API in a programmatic way. Unfortunately, as I found out when I asked on their forums, there was no publicly available schema. The only resource for finding out about the API is the web docs.

Crawling HTML for bits of information is not much fun even in languages that do it well, and for a language like C where I'd have to bring in at least one additional library to parse the HTML, it seemed like it would quickly become a nightmare. So I took another tack.

I relaxed my requirements slightly and wrote the web docs crawler in Python, supported by the `requests` and `beautifulsoup` libraries. My reasoning was, if I could produce a normalized, machine-readable format, it would definitely be helpful to people other than myself. I won't go on at too much length about this part, because that repository is already public on GitHub.

Once I had the docs in JSON format, I was ready to consume them in C. Now, C doesn't have built-in support for JSON (considering it predates JavaScript itself by a couple decades) but there are a number of libraries for this sitting around.

Sean Barrett has made a list of single-file libraries that includes several good ones.

However, I had in fact already written a JSON parser for myself previously as part of the development of this web site that was almost entirely C compatible. It seemed fitting to use that instead of an extra dependency, even if that dependency would be pretty easy to include, because I would be able to control the API and make changes or additions as they became necessary here. So I copied my JSON parser into the project, removed the few C++ features that were being used, and refactored the data layouts a bit so that it could predict the amount of memory it would use to parse a given document.

Once that was done, I started writing out the code that would turn a parsed JSON document into a more usable set of data structures. The structs I defined ended up more or less precisely the same as the layout of the `api.json` I output from Python, with a couple of additions:

```c
// Twitter API endpoint descriptions
typedef struct
{
    twc_string Name;
    twc_string Type;
    twc_string Desc;
    twc_string$ Example;
    bool Required;
    twc_string FieldName;
} api_parameter;

typedef struct
{
    twc_string Path;
    twc_string$ PathParamName;
    twc_string Desc;
    twc_http_method Method;
    bool Unique; // Whether any other endpoint has the same path but different HTTP method
    int ParamCount;
    api_parameter* Params;
} api_endpoint;
```

Going from the parsed JSON to this is fairly trivial. I will, however, highlight three interesting roadblocks, each corresponding to a field I added to the structs.

1. `FieldName` -- I use a certain `Capitalization.Style` in my code, and I wanted the generated code to match. I also didn't want any fields to inadvertently shadow variables or macros from elsewhere, so I generate this string from the Twitter parameter name and use it in the produced code.

2. `Unique` -- Since I'm in C, I obviously can't overload functions with multiple sets of parameters. But there are some cases where the Twitter API has a GET and a POST version of the same endpoint. In these cases, I wanted my generator to append `Get` or `Post` to the name of the generated function.

3. `PathParamName` -- This was a 'fun' one. About 3/4 of the way through writing the library I noticed/remembered that some of the Twitter API functions accept URL slugs, like `statuses/destroy/:id.json`. Argh. For these functions, serialization must be different, since the parameter has to be inserted into the very URL used to make the query. More on that later.
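For the `FieldName` step, a conversion along these lines would do the job. This is only a sketch: it assumes the convention is to capitalize the first letter of each underscore-separated word and drop the underscores (the only case visible in this post is `status` becoming `Status`), and `MakeFieldName` is a hypothetical name rather than the generator's actual function.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

// Hypothetical helper: "in_reply_to_status_id" -> "InReplyToStatusId".
// Writes at most OutSize - 1 characters plus a terminating NUL and
// returns the number of characters written (not counting the NUL).
static size_t
MakeFieldName(const char* ParamName, char* Out, size_t OutSize)
{
    assert(ParamName != NULL && Out != NULL && OutSize > 0);

    size_t Written = 0;
    int StartOfWord = 1;
    for (const char* At = ParamName; *At != '\0' && Written + 1 < OutSize; ++At) {
        if (*At == '_') {
            StartOfWord = 1;  // drop the underscore, capitalize what follows
            continue;
        }
        unsigned char Ch = (unsigned char)*At;
        Out[Written++] = (char)(StartOfWord ? toupper(Ch) : Ch);
        StartOfWord = 0;
    }
    Out[Written] = '\0';
    return Written;
}
```

The same pass could be extended to append `Get` or `Post` when an endpoint's `Unique` flag calls for it, since at that point the name is just a buffer being filled from left to right.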

Alright, that's the data. So how do I get from data to code?

Part 3: The Code

My first impulse was to simply switch on the type right there in the code generator and spit some strings out into the generated document. Something along the lines of:

```c
// Pseudocode: C cannot actually switch on strings, so a real version
// would have to compare Param.Type against each name by hand.
switch (Param.Type) {
    case "twc_string": {
        printf("twc_key_value_pair %s_KV = twc_KeyValueStr(%s, %s);\n", Param.FieldName, ParamString, ParamName);
        printf("ParamList = twc_KeyValueList_InsertSorted(ParamList, &%s_KV);\n", Param.FieldName);
    } break;

    case "int": {
        printf("twc_key_value_pair %s_KV;\n", Param.FieldName);
        printf("char Buf[13];\n");
        printf("memset(Buf, 0, sizeof(Buf));\n");
        printf("twc_SerializeInt(%s, Buf);\n", Param.FieldName);
        // ...
    } break;

    // ...
}
```

As you might expect, this got very unwieldy very fast. Dealing with the code I wanted to generate directly as inline string literals was error prone and hard to manipulate. I want syntax highlighting, damn it! But I had another idea.

I don't know where I originally saw this, but I'm fairly sure I copied it from someone. The idea is, have certain segments of code in a source document flagged as being "templates" (in the traditional sense of the word, and not the C++ sense) for the production of code that serializes a certain type. Then have certain sentinel values inside that "template" that are replaced by a relevant name when the final code is output. For example:

```c
twc_param_serialization(twc_string)
{
    twc_key_value_pair @FieldName@_KV = twc_KeyValueStr(@ParamString@, @ParamName@);
    ParamList = twc_KeyValueList_InsertSorted(ParamList, &@FieldName@_KV);
}
```

This template is read in by the code generator, which is equipped with a decent C tokenizer. It builds a list of all the templates it finds, tagged by which type they are used to serialize. Then, when I need to go through and produce the final function corresponding to an API endpoint, I just look up the template corresponding to each type in the parameter list, and paste that into the final document.
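The paste-with-substitution step can be sketched roughly like this. It is a simplified stand-in: the real generator works on tokens from its C tokenizer, whereas this version scans the template text directly for `@Name@` sentinels. The names `template_binding` and `ExpandTemplate` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

// Hypothetical binding of one sentinel name to its replacement text,
// e.g. FieldName -> "Status".
typedef struct {
    const char* Name;
    const char* Value;
} template_binding;

// Copies Template into Out, replacing each @Name@ that matches a binding
// with that binding's Value. Unknown or unterminated sentinels are copied
// through unchanged. Output is truncated to fit and always NUL-terminated.
// Returns the number of characters written (not counting the NUL).
static size_t
ExpandTemplate(const char* Template,
               const template_binding* Bindings, size_t BindingCount,
               char* Out, size_t OutSize)
{
    assert(Template != NULL && Out != NULL && OutSize > 0);

    size_t Written = 0;
    const char* At = Template;
    while (*At != '\0') {
        const char* Piece = At;   // text to copy for this step
        size_t PieceLength = 1;   // default: copy one character through

        const char* Close = (*At == '@') ? strchr(At + 1, '@') : NULL;
        size_t NameLength = Close ? (size_t)(Close - At - 1) : 0;
        for (size_t i = 0; Close != NULL && i < BindingCount; ++i) {
            if (strlen(Bindings[i].Name) == NameLength &&
                memcmp(Bindings[i].Name, At + 1, NameLength) == 0) {
                Piece = Bindings[i].Value;
                PieceLength = strlen(Piece);
                At = Close;       // the ++At below steps past the closing '@'
                break;
            }
        }

        if (PieceLength > OutSize - 1 - Written) {
            PieceLength = OutSize - 1 - Written;  // truncate to fit
        }
        memcpy(Out + Written, Piece, PieceLength);
        Written += PieceLength;
        ++At;
    }
    Out[Written] = '\0';
    return Written;
}
```

With bindings of `FieldName` to `Status`, `ParamString` to `"status"` (quotes included), and `ParamName` to `Status`, the first line of the `twc_string` template expands to `twc_key_value_pair Status_KV = twc_KeyValueStr("status", Status);`.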

As a side note, I also have another kind of template (specified by `twc_url_serialization`) for parameters that need to end up in the URL. This is just because the local variables that need to be updated are different. One thing I might pursue is separating out the bare minimum needed to serialize a given type and then generating the code that goes around it (e.g. to update the parameter list or fill in the URL slug), because there's a fair bit of repetition between templates.
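To show what "ending up in the URL" means for a path like `statuses/destroy/:id.json`, here is a small runtime sketch. It is not the `twc_url_serialization` template itself, whose contents are not shown in this post; `FillPathParam` is a hypothetical helper that substitutes an already-serialized value for the slug.

```c
#include <assert.h>
#include <ctype.h>
#include <stdio.h>
#include <string.h>

// Hypothetical helper: replaces the first "/:name" slug in Path with Value.
// Returns the length the full result needs (like snprintf), or -1 if Path
// contains no slug. Searching for "/:" rather than ':' alone avoids
// tripping over the colon in a scheme such as "https://".
static int
FillPathParam(const char* Path, const char* Value, char* Out, size_t OutSize)
{
    assert(Path != NULL && Value != NULL && Out != NULL);

    const char* Slash = strstr(Path, "/:");
    if (Slash == NULL) {
        return -1;
    }
    const char* Colon = Slash + 1;

    // The slug name runs over identifier characters, so ":id.json" stops at '.'.
    const char* End = Colon + 1;
    while (isalnum((unsigned char)*End) || *End == '_') {
        ++End;
    }

    // Everything before the colon, then the value, then the rest of the path.
    return snprintf(Out, OutSize, "%.*s%s%s", (int)(Colon - Path), Path, Value, End);
}
```

Called with the path `statuses/destroy/:id.json` and the value `12345`, this produces `statuses/destroy/12345.json`.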

To give an example of how this works in practice, for `statuses/update.json`, I produce a struct creatively named `twc_statuses_update_params` that houses all of the optional parameters that you could pass to the Twitter API, and the following function declaration:

```c
extern twc_call_result
twc_Statuses_Update(twc_state* Twitter,
                    twc_string Status,
                    twc_statuses_update_params Params);
```

Then, when I need to generate the code that serializes all of the parameters (including the required `Status`) for sending off to cURL, I get something that looks like the following:

```c
extern twc_call_result
twc_Statuses_Update(twc_state* Twitter,
                    twc_string Status,
                    twc_statuses_update_params Params)
{
    twc_key_value_list ParamList = NULL;

    twc_key_value_pair Status_KV = twc_KeyValueStr("status", Status);
    ParamList = twc_KeyValueList_InsertSorted(ParamList, &Status_KV);

    // ... All of the other params

    return twc_MakeCall(Twitter, 1, TWC_URL_STATUSES_UPDATE, ParamList);
}
```

This means that the surface area for updating the code when Twitter updates their API is *very* small. In fact, code changes only ever need to be made for the following reasons:

1. Twitter adds a function that takes a new type of data. (This is not a pressing concern, because it will only become apparent when I update my API schema script to correctly identify this new type -- otherwise, it'll just default to 'string', which delegates serialization to the library user)

2. I find a bug with the way a certain type of parameter is serialized. In this case, I fix it in one place (the template), and every single place that type of parameter appeared, the bug will be fixed.

That's it!

My overall return on investment on this has been very good. The code generator is around 800 LLOC, all of the templates together come to about 150 LLOC, and the produced header and C file are just over 4000 LLOC. About 950 lines in for 4000 out, or roughly 25%, is a pretty awesome compression ratio, if you ask me!

Part 4: The Conclusion

The downside to all this is that it's very difficult to test. A future direction I want to pursue is automatically generating test cases for each API endpoint, to make sure that there are no unexpected crashes or API errors returned. I'm trying to maximize the robustness and flexibility that I can give myself if I'm the only one maintaining the project. But who knows, maybe someone else will be interested and dedicated enough to help out?

For now, you can download the library from [GitHub](https://github.com/Chronister/twc) and try it out for yourself (Makefile provided for Linuckers, batch file for Windowheads). I'm also working on a command-line Twitter client to allow for quick-n-easy tweeting (think `tweet "CLI is dead, long live CLI"`), so keep your eyes peeled for that.

Between developing stuff in C and taking way too many classes at university, I also r/w on twitter dot com as @chronaldragon, so if you have any feedback or comments for me, please @ me or leave them in the comment box below.

Thanks for reading!