Hello,

in my music player project (https://mplay3.handmade.network/) I extract all metadata from all .mp3 files that are in a user-defined folder.

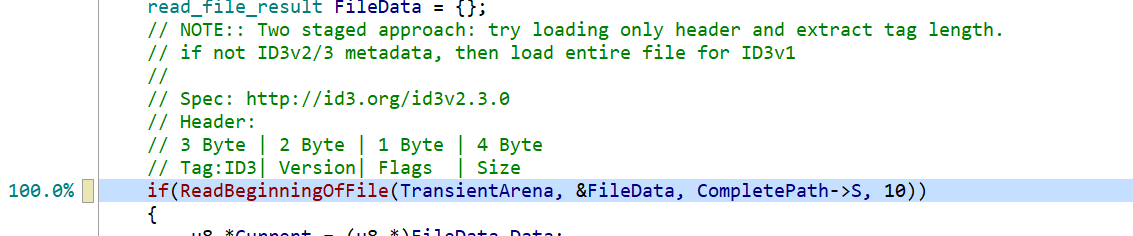

The problem is, it takes a really long time for windows to access these files, as shown in this image, 100% of the time is spend in CreateFileW.

And I only load the first 10bytes of the file!

The thing is, when I run it a second time, the duration _drastically increases. Now it only takes 122µs!

Because of that, I am not sure if there is anything I can do. In my music library I have 8k songs and it takes a few minutes to extract all metadata the first time, the second time it only takes ~6 seconds.

Maybe someone knows if there is anything to be done. I could not really find any good information about this. So I am trying here.

Thanks in advance for any information/help!

-TJ Dierks

Unfortunately filesystem drivers on Windows are not the fastest thing out there. They have significant overhead compared to modern filesystems on Linux. They also offers way for other drivers to hook into various operations to filesystem thus slowing down everything even more without you having any control over that.

WdFilter.sys in your screenshot is Windows Defender antivirus/antimalware scanner. Disabling that should give you significant speedup.

The best alternative you can do is heavily multithread your filesystem operations. Modern SSD's can easily handle many simultaneous filesystem accesses. This way you can hide individual file access latency behind many-parallel requests to filesystem. For things like scanning headers/metadata of files this should be very noticeable speedup. You should do 2x or more I/O background threads for small reads like this than actual core count in your system.

At work we have similar problem where compiling a lot of tiny files increases build time significantly just because there is bad antivirus driver intercepting filesystem calls. There's no easy way around it (except turning such software off).

Thank you for the quick response! mmozeiko you are really a treasure! Not only do you seem to know everything, you're also super fast!^^

I actually already do the file scanning multi-threaded, but only like a handful of threads. Because during development of that threading, I was testing with the same files all the time. Them being 'hot' made it look like just a few threads were a bit more effective than many smaller. I did not think about the fact that it may be useful on a first run to have more smaller threads as they actually just wait for windows a bunch. I will try that out, thanks a bunch!

Another related thing. Is there a way to 'unvalidate' the files again after having them opened once? Because it is really annoying to test, as only the very first run takes long, after that, verifying results is not possible anymore.

There's no builtin utility for that. But you can use this API function: SetSystemFileCacheSize If you call it with (SIZE_T)-1, (SIZE_T)-1 arguments then it will clear file cache. It requires admin rights, so right click -> run as administrator, or run from admin cmd.exe.

Here's example how to call it: https://gist.github.com/mmozeiko/2d455bf4e9ba02e69365e7cc63f4df2f?ts=4

In my framework, I separate saving and loading of byte buffers from parsing and generation of their file content. When you have an already generated file in a buffer of known size, you only call the system once to write the whole memory block at once. On Windows, this makes the difference between waiting five minutes (too many system calls), and not even noticing the delay (a single write).

For streaming huge files or live broadcasts via User Datagram Protocol, you can do the buffering in larger chunks of data, depending on what is considered responsive enough.