Added another planned optimization to the Sandbox example. Using dirty rectangles, I can avoid redrawing the height, diffuse and normal backgrounds where nothing has moved. I worried that the frame-rate would feel uneven, because dirty rectangles widen the gap between worst-case and average-case frame times. At these speeds, however, a quarter of a frame's jitter is lost anyway in the tearing against the screen's 144Hz refresh rate.
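The dirty-rectangle idea can be sketched as follows. This is a minimal, standalone C++ illustration, not the Sandbox example's code: `IRect`, `Image`, `unionOf`, `clipTo` and `restoreDirty` are hypothetical names. It assumes that each background layer (height, diffuse and normal would each get one) is a single-channel 32-bit image with the same size as the target. A moving object dirties the union of its old and new bounds, and only those regions are restored from the cached background before the object is redrawn.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical integer rectangle; right and bottom are exclusive.
struct IRect {
    int left = 0, top = 0, right = 0, bottom = 0;
    bool empty() const { return right <= left || bottom <= top; }
};

// Smallest rectangle covering both inputs (e.g. a sprite's old and new bounds).
IRect unionOf(const IRect& a, const IRect& b) {
    if (a.empty()) return b;
    if (b.empty()) return a;
    return {std::min(a.left, b.left), std::min(a.top, b.top),
            std::max(a.right, b.right), std::max(a.bottom, b.bottom)};
}

// Clamps a rectangle to the image area.
IRect clipTo(const IRect& r, int width, int height) {
    return {std::max(r.left, 0), std::max(r.top, 0),
            std::min(r.right, width), std::min(r.bottom, height)};
}

// One background layer (height, diffuse or normal) stored row by row.
struct Image {
    int width, height;
    std::vector<std::uint32_t> pixels;
    Image(int w, int h, std::uint32_t fill)
        : width(w), height(h), pixels(std::size_t(w) * h, fill) {}
    std::uint32_t at(int x, int y) const { return pixels[std::size_t(y) * width + x]; }
};

// Copies the cached background into the target only inside the dirty rectangles;
// every other pixel keeps what was drawn in earlier frames.
void restoreDirty(Image& target, const Image& background, const std::vector<IRect>& dirty) {
    assert(target.width == background.width && target.height == background.height);
    for (const IRect& d : dirty) {
        const IRect r = clipTo(d, target.width, target.height);
        if (r.empty()) continue;
        for (int y = r.top; y < r.bottom; ++y) {
            const std::uint32_t* src = background.pixels.data() + std::size_t(y) * background.width;
            std::uint32_t* dst = target.pixels.data() + std::size_t(y) * target.width;
            std::copy(src + r.left, src + r.right, dst + r.left);
        }
    }
}
```

Merging the old and new bounds into one rectangle keeps the list short. For an object that jumps far in one frame, keeping the two rectangles separate would avoid restoring the untouched space between them.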

Results

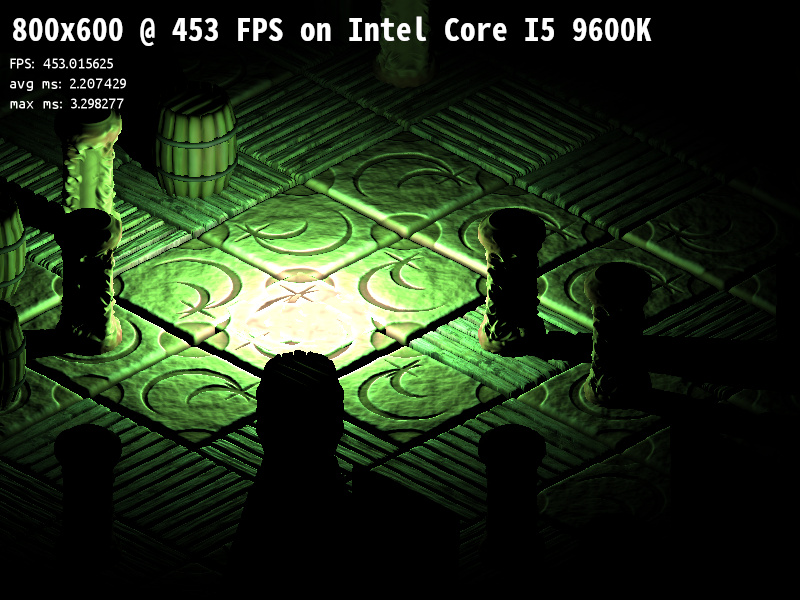

Reached 453 frames per second instead of 295 at 800x600 pixels. With 185 FPS at 1920x1080 pixels, I'm pushing the limits of my 144Hz gaming monitor and its extra fast DisplayPort cable.
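Converting the reported frame-rates into frame times makes the gain easier to compare. This is plain arithmetic on the numbers above:

```latex
\begin{aligned}
t_{\text{before}}^{800\times600} &= \frac{1000\,\text{ms}}{295} \approx 3.39\,\text{ms} \\
t_{\text{after}}^{800\times600} &= \frac{1000\,\text{ms}}{453} \approx 2.21\,\text{ms} \\
t^{1920\times1080} &= \frac{1000\,\text{ms}}{185} \approx 5.41\,\text{ms} \\
t_{\text{refresh}}^{144\,\text{Hz}} &= \frac{1000\,\text{ms}}{144} \approx 6.94\,\text{ms}
\end{aligned}
```

So the dirty rectangles save about 1.18 ms per frame at 800x600 (roughly a 1.54× speedup), and the 1920x1080 frame time is already below the 6.94 ms refresh interval of a 144Hz screen.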

Might sound like overkill

But getting people to use CPU rendering again requires some sick performance to be convincing, because performance is the GPU's only advantage. More optimization can be done by computing deferred lighting one block at a time in a smaller buffer. The current implementation is naive and thrashes the cache. Working in blocks would also allow skipping light sources that fail early light culling tests.
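Block-wise deferred lighting with early culling might look roughly like this. It is a hedged sketch, not the engine's implementation: `PointLight`, `lightTouchesTile` and `shadeTiled` are hypothetical names, lights are assumed to be screen-space points with a linear falloff that reaches zero at `radius`, and the G-buffer is reduced to a single diffuse channel. Each tile first culls the lights whose radius cannot reach it, then accumulates the remaining lights into a tile-sized buffer that stays in cache.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical screen-space point light with linear falloff to zero at `radius`.
struct PointLight {
    float x, y;       // position in pixels
    float radius;     // no contribution at or beyond this distance
    float intensity;
};

// Early culling test against the tile's inclusive pixel range [x0, x1] x [y0, y1]:
// measures the distance from the light to the closest point of the tile.
bool lightTouchesTile(const PointLight& l, int x0, int y0, int x1, int y1) {
    const float cx = std::max(float(x0), std::min(l.x, float(x1)));
    const float cy = std::max(float(y0), std::min(l.y, float(y1)));
    const float dx = l.x - cx, dy = l.y - cy;
    return dx * dx + dy * dy < l.radius * l.radius;
}

// Lights the image one tile at a time, using a small per-tile light buffer.
void shadeTiled(std::vector<float>& out, const std::vector<float>& diffuse,
                int width, int height, const std::vector<PointLight>& lights,
                int tileSize) {
    assert(tileSize > 0);
    assert(diffuse.size() == std::size_t(width) * std::size_t(height));
    assert(out.size() == diffuse.size());
    std::vector<float> tileLight(std::size_t(tileSize) * std::size_t(tileSize));
    std::vector<const PointLight*> visible;
    for (int ty = 0; ty < height; ty += tileSize) {
        for (int tx = 0; tx < width; tx += tileSize) {
            const int x1 = std::min(tx + tileSize, width);
            const int y1 = std::min(ty + tileSize, height);
            const int tw = x1 - tx;
            // Early culling: keep only the lights whose radius reaches this tile.
            visible.clear();
            for (const PointLight& l : lights)
                if (lightTouchesTile(l, tx, ty, x1 - 1, y1 - 1)) visible.push_back(&l);
            // Accumulate the surviving lights into the tile buffer, one light at a time.
            std::fill(tileLight.begin(), tileLight.end(), 0.0f);
            for (const PointLight* l : visible) {
                for (int y = ty; y < y1; ++y) {
                    for (int x = tx; x < x1; ++x) {
                        const float dx = float(x) - l->x, dy = float(y) - l->y;
                        const float d = std::sqrt(dx * dx + dy * dy);
                        if (d < l->radius)
                            tileLight[std::size_t(y - ty) * tw + (x - tx)] +=
                                l->intensity * (1.0f - d / l->radius);
                    }
                }
            }
            // Resolve: modulate the accumulated light by the diffuse channel.
            for (int y = ty; y < y1; ++y) {
                for (int x = tx; x < x1; ++x) {
                    const std::size_t i = std::size_t(y) * width + x;
                    out[i] = diffuse[i] * tileLight[std::size_t(y - ty) * tw + (x - tx)];
                }
            }
        }
    }
}
```

Because the culling test uses the closest point of the tile to the light, it never rejects a light that would have lit a pixel in the tile, so the output matches a brute-force loop over all lights while touching far fewer light/pixel pairs.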

What to do next

Ray-tracing cards use parallelism to achieve soft shadows by giving each pixel a different light direction. With a CPU's higher clock frequency, one can instead go for higher frame-rates, letting light sources shake faster than the eye can see, and then smooth out the result using temporal motion blur. A sliding window integral would allow creating stable, distance-adaptive shadows from a predefined set of light offsets. As long as the light being subtracted from the window has the same relative offset as the new light being added, the result should look stable without the need for noise reduction filters. An exponentially fading temporal blur would be cheaper, but the newest frame always carries the largest weight, so the perceived smoothness is limited by that most visible frame.
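The sliding window integral can be sketched as a ring of per-frame shadow buffers plus a running sum. This sketch assumes, hypothetically, that frame `i` is rendered with offset number `i % N` from a predefined offset table, so the frame leaving the window always used the same offset as the frame entering it. `SlidingShadowWindow` and `exponentialBlend` are illustrative names, and the renderer that produces each shadow buffer is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical sliding-window integral over the last N shadow frames.
// Frame i is assumed to be rendered with light offset number i % N, so the frame
// that leaves the window always used the same offset as the one that enters it.
class SlidingShadowWindow {
public:
    SlidingShadowWindow(std::size_t windowSize, std::size_t pixelCount)
        : history(windowSize, std::vector<std::uint8_t>(pixelCount, 0)),
          sum(pixelCount, 0) {
        assert(windowSize > 0);
    }

    // Offset number to render the next frame with.
    std::size_t nextOffsetIndex() const { return frameIndex % history.size(); }

    // Subtracts the oldest frame (same offset number) and adds the new one.
    void push(const std::vector<std::uint8_t>& shadow) {
        assert(shadow.size() == sum.size());
        std::vector<std::uint8_t>& slot = history[nextOffsetIndex()];
        for (std::size_t p = 0; p < sum.size(); ++p) {
            sum[p] = sum[p] + shadow[p] - slot[p]; // exact integer update, no drift
            slot[p] = shadow[p];
        }
        ++frameIndex;
        if (filled < history.size()) ++filled;
    }

    // Average light at pixel p over the frames currently in the window.
    float value(std::size_t p) const {
        return filled == 0 ? 0.0f : float(sum[p]) / float(filled);
    }

private:
    std::vector<std::vector<std::uint8_t>> history; // one buffer per offset
    std::vector<std::uint32_t> sum;                  // running sum of the window
    std::size_t frameIndex = 0;
    std::size_t filled = 0;
};

// Cheaper alternative: an exponentially fading blur needs only one buffer, but
// among the blended frames the newest always has the largest weight (alpha).
void exponentialBlend(std::vector<float>& accumulated, const std::vector<float>& shadow, float alpha) {
    assert(accumulated.size() == shadow.size());
    for (std::size_t p = 0; p < accumulated.size(); ++p)
        accumulated[p] += alpha * (shadow[p] - accumulated[p]);
}
```

Storing shadows as 8-bit values with an integer running sum makes subtracting the old frame exact, so a static scene keeps exactly the same average instead of slowly drifting from floating-point rounding. The price is memory for N full buffers, which the single-buffer exponential blend avoids.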

Screen-space ambient occlusion can be stored relative to the world, using the same technique as the background. By updating ambient light only around places where something moved, these soft shadows will cost close to nothing.
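Updating ambient occlusion only near movement could be organized around a grid of blocks with dirty flags. In this minimal sketch, `AmbientCache` and its methods are hypothetical, the cache is assumed to be aligned with the background image, and the actual occlusion estimate is passed in as a callback. A moved object dirties every block within the occlusion sampling radius of its bounds, since pixels that far away can sample it.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical world-aligned ambient occlusion cache split into square blocks.
// Only blocks flagged as dirty are recomputed; the rest keep their cached values.
class AmbientCache {
public:
    AmbientCache(int w, int h, int block)
        : width(w), height(h), blockSize(block),
          blocksX((w + block - 1) / block), blocksY((h + block - 1) / block),
          values(std::size_t(w) * h, 0.0f),
          dirty(std::size_t(blocksX) * blocksY, true) {
        assert(block > 0);
    }

    // Call once for a moved object's old bounds and once for its new bounds.
    // The area is grown by aoRadius because occlusion samples reach that far.
    void markMoved(int left, int top, int right, int bottom, int aoRadius) {
        const int x0 = std::max(left - aoRadius, 0), y0 = std::max(top - aoRadius, 0);
        const int x1 = std::min(right + aoRadius, width), y1 = std::min(bottom + aoRadius, height);
        if (x0 >= x1 || y0 >= y1) return; // entirely outside the cache
        for (int by = y0 / blockSize; by <= (y1 - 1) / blockSize; ++by)
            for (int bx = x0 / blockSize; bx <= (x1 - 1) / blockSize; ++bx)
                dirty[std::size_t(by) * blocksX + bx] = true;
    }

    // Recomputes every dirty block with the supplied occlusion estimate and
    // returns how many blocks were updated.
    int update(const std::function<float(int, int)>& computeAO) {
        int recomputed = 0;
        for (int by = 0; by < blocksY; ++by) {
            for (int bx = 0; bx < blocksX; ++bx) {
                const std::size_t b = std::size_t(by) * blocksX + bx;
                if (!dirty[b]) continue;
                const int x1 = std::min((bx + 1) * blockSize, width);
                const int y1 = std::min((by + 1) * blockSize, height);
                for (int y = by * blockSize; y < y1; ++y)
                    for (int x = bx * blockSize; x < x1; ++x)
                        values[std::size_t(y) * width + x] = computeAO(x, y);
                dirty[b] = false;
                ++recomputed;
            }
        }
        return recomputed;
    }

    float at(int x, int y) const { return values[std::size_t(y) * width + x]; }

private:
    int width, height, blockSize, blocksX, blocksY;
    std::vector<float> values;
    std::vector<bool> dirty;
};
```

When nothing moves, `update` only scans the dirty flags, so the per-frame cost of the ambient light approaches zero, as the paragraph above suggests.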

Once I'm done optimizing, this will be enough to challenge Nvidia's latest RTX cards in terms of resolution, frame-rate and visual quality.