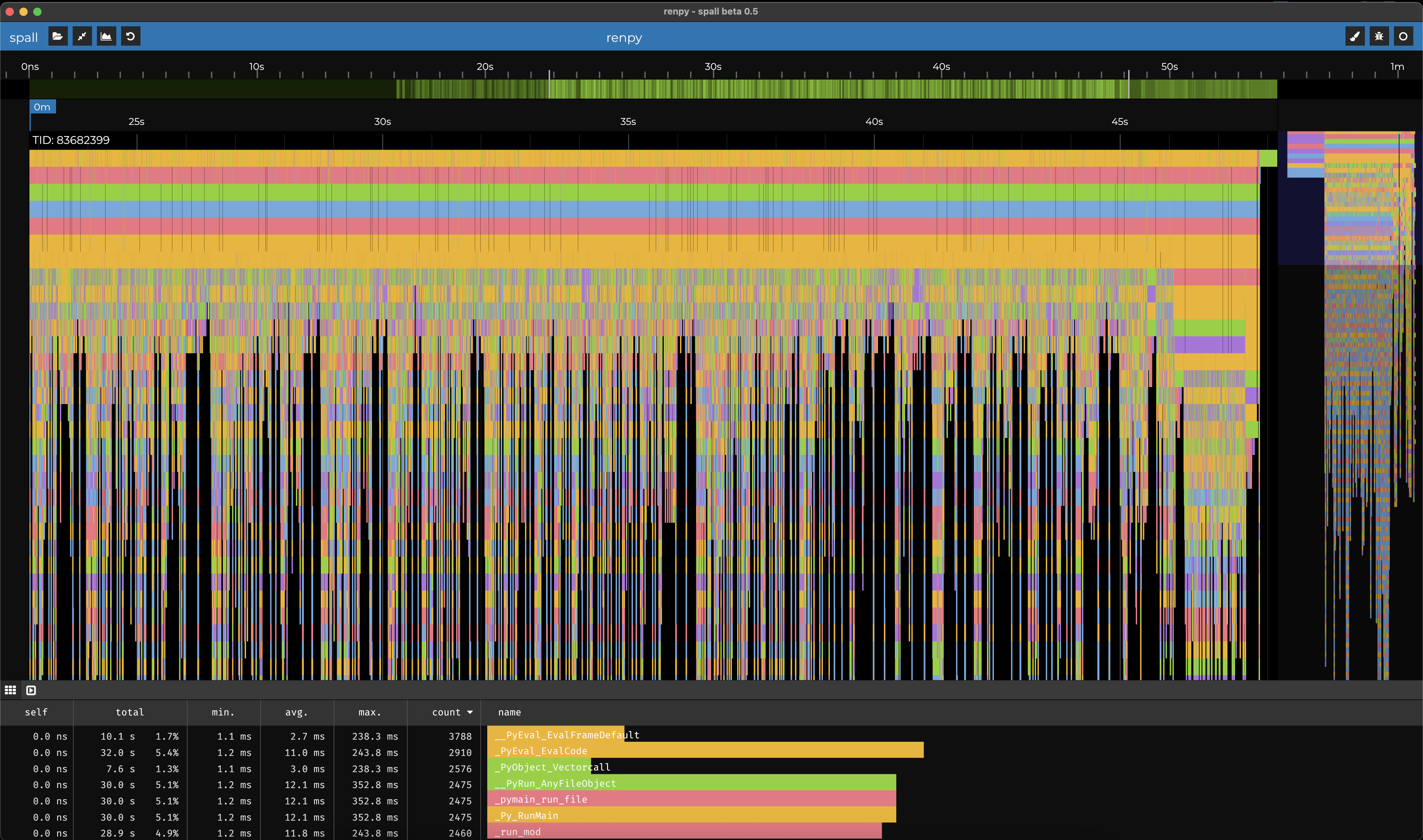

&spall Messed with auto-tracing LuaJIT this evening, getting back into Spall after a bit of a hiatus.

```lua
local samples = 1000000

local T = {}
local CachedTable = {"abc", "def", "ghk"}

local function test1(times)
  T[times] = CachedTable
end

do
  for warm = 1, samples do
    test1(warm)
  end
end
```

Interesting to note, for simple bench tests, the more loop iterations you have, the longer the JIT seems to take to kick in.

for 100,000 iterations it takes >1ms to move to JITted code

for 1,000,000, it's 8ms

and for 10,000,000 it's ~60ms
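For anyone who wants to poke at this without Spall, here's a rough sketch of one way to time how long it takes until the first trace finishes compiling. It is not how the numbers above were captured. It assumes LuaJIT and leans on `jit.attach`, the internal hook the bundled `jit.v` and `jit.dump` modules use, so its behavior may differ between versions. `time_to_first_trace` is a made-up helper name, and `os.clock` is only a coarse CPU-time clock, so treat the output as ballpark.

```lua
-- Sketch: time from loop start until LuaJIT finishes compiling its first trace.
-- Assumption: running under LuaJIT. On plain Lua the "jit" module is missing,
-- so only the total loop time is reported.
local has_jit, jit = pcall(require, "jit")

-- Hypothetical helper: runs the same store loop as the benchmark above and
-- returns (seconds until first compiled trace or nil, total seconds).
local function time_to_first_trace(samples)
  local T = {}
  local CachedTable = {"abc", "def", "ghk"}
  local first_stop          -- set when the first "stop" trace event arrives
  local start = os.clock()

  -- "stop" is the event LuaJIT emits when a trace has been fully compiled.
  local function on_trace(what)
    if what == "stop" and not first_stop then
      first_stop = os.clock() - start
    end
  end

  if has_jit then
    jit.flush()                     -- drop old traces so every run starts cold
    jit.attach(on_trace, "trace")   -- subscribe to trace events
    start = os.clock()              -- restart the clock after setup
  end

  for i = 1, samples do
    T[i] = CachedTable
  end

  local total = os.clock() - start
  if has_jit then
    jit.attach(on_trace)            -- calling without an event name detaches
  end
  return first_stop, total
end

for _, n in ipairs({100000, 1000000, 10000000}) do
  local first, total = time_to_first_trace(n)
  local first_text = first and string.format("%.3f ms", first * 1000) or "no trace"
  print(n, "first trace: " .. first_text, string.format("total: %.3f ms", total * 1000))
end
```

If the time to the first trace stays roughly flat while the total grows, the extra delay is probably coming from somewhere other than the compiler itself, which would be worth checking before reading too much into the numbers.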

The actual asm-gen part of LuaJIT is incredibly zippy: ~50-100us here (the spiky part in the screenshot).